How to Configure a Load Balancer

Overview

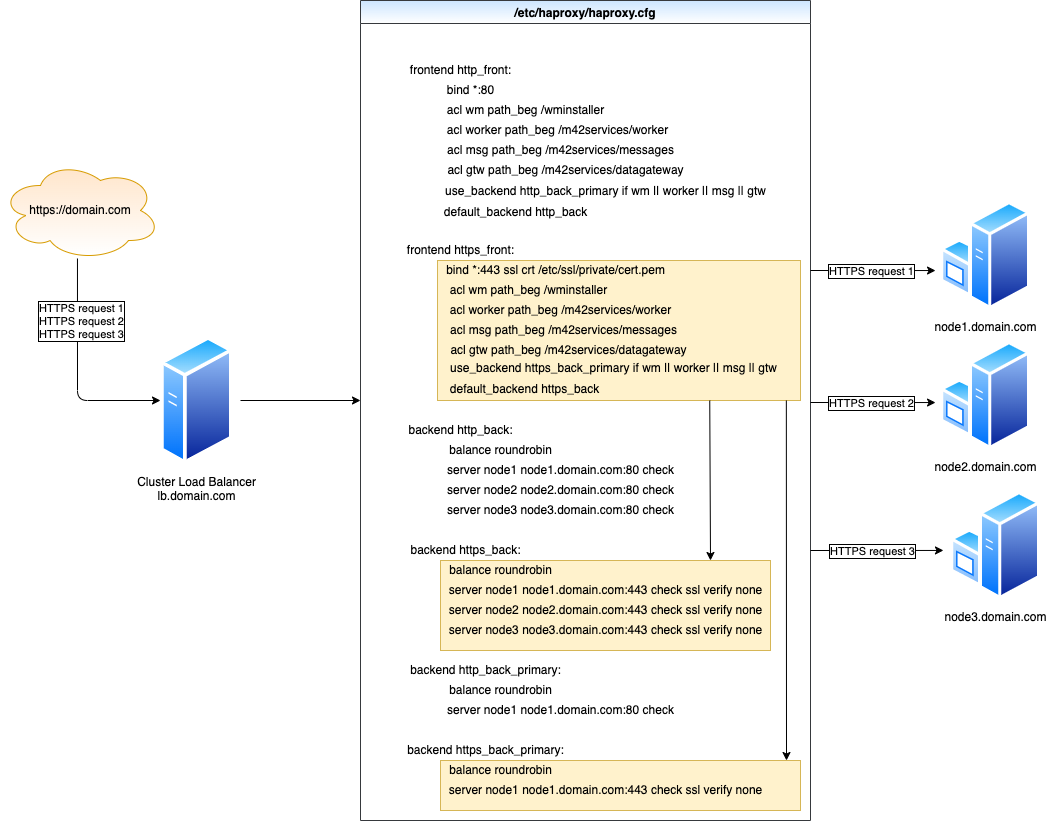

The objective of this topic is to provide a basic load balancer configuration that distributes web traffic across multiple web servers. When clients access the main Matrix42 Software URL, the load balancer relays their traffic to the available nodes in the cluster. This allows capacity to grow to serve more clients without requiring those clients to connect to each node directly.

Many well-known load balancing tools are available on the market. This article focuses on HAProxy, which offers solid performance and is simple to configure.

HAProxy is officially available at https://www.haproxy.com/

Use this video tutorial as a reference:

HAProxy installation

Depending on the Linux distribution (Debian or Ubuntu) and its version installed in the target environment, the installation steps may vary slightly. Use the wizard at https://haproxy.debian.net/ to get installation steps that precisely fit the target environment.

The installation steps below are based on the following assumptions:

-

HAProxy is installed on Ubuntu 20.04 LTS (the latest HAProxy version at the time of writing is 2.5-stable);

-

At least three nodes with the product installed are available, with node 1 designated as the primary node. In the sample below, they are accessible as node1.domain.com, node2.domain.com, and node3.domain.com;

-

A self-signed certificate must be generated on all the nodes. Alternatively, an existing standard certificate can be used. Once the HAProxy installation is complete, the certificate for the load balancer must be placed at /etc/ssl/private/cert.pem on the HAProxy host.
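If no existing certificate is available for the load balancer, one way to produce /etc/ssl/private/cert.pem on the HAProxy host is sketched below. It assumes OpenSSL is installed and that HAProxy should read a single PEM file containing the certificate followed by its private key, which is the usual layout for `bind ... ssl crt`. The common name, key size, validity period, and the helper name `generate_lb_cert` are example values chosen for illustration, not requirements of the product.

```shell
#!/usr/bin/env bash
# Generate a self-signed certificate for the load balancer and store it as one
# PEM file (certificate first, then private key) for "bind ... ssl crt".
# Assumptions: OpenSSL is installed; CN, RSA key size and 365-day validity are
# example values; generate_lb_cert is a hypothetical helper name.

# Usage: generate_lb_cert [common-name] [output-dir]
generate_lb_cert() {
  local cn="${1:-domain.com}"
  local out_dir="${2:-/etc/ssl/private}"
  local tmp
  tmp="$(mktemp -d)" || return 1

  # Create a new RSA key and a self-signed X.509 certificate, no passphrase.
  if ! openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
      -subj "/CN=${cn}" \
      -keyout "${tmp}/lb.key" -out "${tmp}/lb.crt" 2>/dev/null; then
    rm -rf "$tmp"
    return 1
  fi

  # Concatenate certificate and key into the file referenced in haproxy.cfg.
  mkdir -p "$out_dir"
  cat "${tmp}/lb.crt" "${tmp}/lb.key" > "${out_dir}/cert.pem"
  chmod 600 "${out_dir}/cert.pem"
  rm -rf "$tmp"
}
```

Run as root, `generate_lb_cert domain.com` writes /etc/ssl/private/cert.pem. Because the certificate is self-signed, browsers will show a warning until a certificate from a trusted authority is used instead.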

To install and configure HAProxy, perform the following steps:

-

Run the following commands to install HAProxy:

    sudo apt-get install --no-install-recommends software-properties-common
    sudo add-apt-repository ppa:vbernat/haproxy-2.5
    sudo apt-get install haproxy=2.5.\*

-

Open the HAProxy configuration file:

    sudo vi /etc/haproxy/haproxy.cfg

-

Modify haproxy.cfg by appending the following configuration to the end of the file:

    ### Frontend HTTP and HTTPS listeners ###
    frontend http_front
        bind *:80
        acl wm path_beg /wminstaller
        acl worker path_beg /m42services/worker
        acl msg path_beg /m42services/messages
        acl gtw path_beg /m42services/datagateway
        use_backend http_back_primary if wm || worker || msg || gtw
        default_backend http_back

    frontend https_front
        bind *:443 ssl crt /etc/ssl/private/cert.pem
        acl wm path_beg /wminstaller
        acl worker path_beg /m42services/worker
        acl msg path_beg /m42services/messages
        acl gtw path_beg /m42services/datagateway
        use_backend https_back_primary if wm || worker || msg || gtw
        default_backend https_back

    ### Backend HTTP and HTTPS listeners ###
    backend http_back
        balance roundrobin
        server node1 node1.domain.com:80 check
        server node2 node2.domain.com:80 check
        server node3 node3.domain.com:80 check

    backend https_back
        balance roundrobin
        server node1 node1.domain.com:443 check ssl verify none
        server node2 node2.domain.com:443 check ssl verify none
        server node3 node3.domain.com:443 check ssl verify none

    backend http_back_primary
        balance roundrobin
        server node1 node1.domain.com:80 check

    backend https_back_primary
        balance roundrobin
        server node1 node1.domain.com:443 check ssl verify none

-

Save the file and restart the HAProxy service:

    sudo service haproxy restart
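Restarting with a broken haproxy.cfg can leave the load balancer down, so it can help to check the file first. The sketch below assumes a Ubuntu host where the `haproxy` binary and the `service` command are available, and that it is run as root (the certificate under /etc/ssl/private is typically readable only by root). The function name `reload_haproxy_safely` is hypothetical.

```shell
#!/usr/bin/env bash
# Check the HAProxy configuration and restart the service only if the check
# passes, so a typo in haproxy.cfg does not take the load balancer down.
# Assumption: run as root; reload_haproxy_safely is a hypothetical helper name.

# Usage: reload_haproxy_safely [path-to-haproxy.cfg]
reload_haproxy_safely() {
  local cfg="${1:-/etc/haproxy/haproxy.cfg}"

  # "haproxy -c" only checks the configuration; it does not start a proxy.
  if ! haproxy -c -f "$cfg"; then
    echo "configuration check failed for ${cfg}; HAProxy was not restarted" >&2
    return 1
  fi

  # The check passed, so restarting the service is safe.
  service haproxy restart
}
```

Calling `reload_haproxy_safely` with no argument checks /etc/haproxy/haproxy.cfg and restarts HAProxy only when the check succeeds.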

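Once the configuration is complete, verify it on the HAProxy statistics page (for example, domain.com/stats) to check that all sections have been applied and are ready to accept connections from outside. The statistics page is only available if it is enabled in haproxy.cfg with the stats enable and stats uri directives, which the configuration above does not include.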
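A minimal sketch of a statistics listener is shown below. It uses a dedicated `listen` section so the page is not mixed with application traffic; the port 8404, the 10-second refresh interval, and the helper name `print_stats_section` are example values, not part of the original setup. To serve the page under domain.com/stats instead, the `stats enable` and `stats uri /stats` directives would go into `http_front`.

```shell
#!/usr/bin/env bash
# Print a "listen" section that enables the HAProxy statistics page.
# Assumptions: port 8404 and the refresh interval are example values;
# print_stats_section is a hypothetical helper name.

# Usage: print_stats_section [port]
print_stats_section() {
  local port="${1:-8404}"
  cat <<EOF
### Statistics page ###
listen stats
    bind *:${port}
    mode http
    stats enable
    stats uri /stats
    stats refresh 10s
EOF
}

print_stats_section
```

The output can be appended with `print_stats_section | sudo tee -a /etc/haproxy/haproxy.cfg`; after the configuration is checked and HAProxy restarted, the page would be reachable at http://domain.com:8404/stats. Adding a `stats auth <user>:<password>` line restricts access to the page.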

The load balancer matches incoming connections with the frontend listeners:

- Unsecured HTTP connections go to http_front;
- Secured HTTPS connections are processed by https_front.

Each of these listeners must be linked to corresponding backend sections (through default_backend and use_backend) so that the load balancer can distribute incoming requests across all the nodes.

The section and rule names are only examples. In a real system configuration, they can be changed to more meaningful and descriptive names.

How requests are distributed depends on the configured balancing algorithm. Here, roundrobin is used to split the load across the nodes in the farm.

Round-robin load balancing is a simple way to distribute client requests across a group of servers: each client request is forwarded to the next server in turn. When the algorithm reaches the end of the list, it loops back and starts again from the first node.
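The rotation described above can be modelled in a few lines. This is a simplified sketch assuming all servers have equal weight and are healthy; HAProxy's actual roundrobin is weighted and dynamic, so the starting node and exact order in a live system may differ. The function name `round_robin` is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified model of round-robin distribution with equal server weights.
# It ignores health checks and HAProxy's internal state; illustration only.

# Usage: round_robin <requests> <node>...
round_robin() {
  local requests="$1"
  shift
  (( $# > 0 )) || { echo "no nodes given" >&2; return 1; }
  local nodes=("$@")
  local i
  for (( i = 0; i < requests; i++ )); do
    # The modulo wraps back to the first node after the last one.
    echo "request $(( i + 1 )) -> ${nodes[i % ${#nodes[@]}]}"
  done
}

round_robin 5 node1 node2 node3
# request 1 -> node1
# request 2 -> node2
# request 3 -> node3
# request 4 -> node1
# request 5 -> node2
```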

Because the system cannot process some data consistently across all nodes, the following exception rules must be created to forward traffic for particular URLs to a single primary node:

- wm: URL path starts with /wminstaller
- worker: URL path starts with /m42services/worker
- msg: URL path starts with /m42services/messages
- gtw: URL path starts with /m42services/datagateway

Dedicated http_back_primary and https_back_primary backends must be configured to handle these requests and forward them to the primary node only (node 1 in the current context).
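The routing decision made by the acl and use_backend lines can be illustrated as follows. A `path_beg` ACL is a prefix match on the URL path, reproduced here with shell `case` patterns; HAProxy performs this routing itself, so the helper `pick_backend` and the sample path /index.html are hypothetical and serve only to show which backend each kind of request reaches.

```shell
#!/usr/bin/env bash
# Model of the acl / use_backend rules in http_front and https_front:
# path_beg is a prefix match, reproduced here with case patterns.
# pick_backend is a hypothetical helper; HAProxy itself does the routing.

# Usage: pick_backend <http|https> <url-path>
pick_backend() {
  local scheme="$1" path="$2"
  case "$path" in
    /wminstaller*|/m42services/worker*|/m42services/messages*|/m42services/datagateway*)
      # wm || worker || msg || gtw matched: primary node only.
      echo "${scheme}_back_primary" ;;
    *)
      # No exception rule matched: default round-robin backend.
      echo "${scheme}_back" ;;
  esac
}

pick_backend https /m42services/worker/jobs   # https_back_primary
pick_backend http /index.html                 # http_back
```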

Automatic health checking is another benefit of the load balancer. In the current configuration, it is enabled by the check argument at the end of each server line. Unreachable nodes are bypassed until they become available again. As a result, the system remains available as long as at least one node is alive and able to accept requests; requests matching the exception rules above, however, are served by node 1 only and therefore also require node 1 to be available.
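The effect of health checks on the rotation can be sketched by removing failed nodes from the round-robin list. This is a simplified model under the assumption that a node is either fully up or fully down; HAProxy's real checks use configurable intervals and rise/fall thresholds. The function name and argument layout are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified model of round robin combined with health checks: nodes that fail
# their check are left out of the rotation until they recover.
# round_robin_healthy and its argument layout are hypothetical.

# Usage: round_robin_healthy <requests> "<down nodes>" <node>...
round_robin_healthy() {
  local requests="$1" down=" $2 "
  shift 2
  local -a healthy=()
  local node i
  for node in "$@"; do
    # Keep only the nodes that are not listed as down.
    [[ "$down" == *" $node "* ]] || healthy+=("$node")
  done
  if (( ${#healthy[@]} == 0 )); then
    echo "no healthy node left: requests cannot be served" >&2
    return 1
  fi
  for (( i = 0; i < requests; i++ )); do
    echo "${healthy[i % ${#healthy[@]}]}"
  done
}

# node2 fails its health check, so traffic alternates between node1 and node3.
round_robin_healthy 4 "node2" node1 node2 node3
# node1
# node3
# node1
# node3
```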

When multiple HTTPS requests are sent to the load balancer, they follow the request flow shown below: subsequent requests are processed one by one, cycling through the nodes in turn.

When extending the cluster to increase its capacity, allocate additional nodes and add them to haproxy.cfg accordingly. Both the http_back and https_back sections must list the entire set of nodes in the farm.
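Since both round-robin backends must list the same nodes, it can help to generate their server lines from one node list. The sketch below assumes every node is reachable as `<node>.<domain>` on ports 80 and 443, as in the sample configuration; `print_backends` is a hypothetical helper, and its output would replace the existing http_back and https_back sections by hand.

```shell
#!/usr/bin/env bash
# Print the http_back and https_back sections for a list of nodes, so that
# both backends always contain the same set of servers.
# Assumption: every node is reachable as <node>.<domain> on ports 80 and 443;
# print_backends is a hypothetical helper, not part of HAProxy or the product.

# Usage: print_backends <domain> <node>...
print_backends() {
  local domain="$1"
  shift
  local node
  echo "backend http_back"
  echo "    balance roundrobin"
  for node in "$@"; do
    echo "    server ${node} ${node}.${domain}:80 check"
  done
  echo
  echo "backend https_back"
  echo "    balance roundrobin"
  for node in "$@"; do
    echo "    server ${node} ${node}.${domain}:443 check ssl verify none"
  done
}

# Example: a fourth node added to the farm.
print_backends domain.com node1 node2 node3 node4
```

After pasting the generated sections into haproxy.cfg, check the configuration and restart HAProxy as described in the installation steps.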

It is strongly recommended to first configure the load balancer with a single primary node and verify that the system performs well. This is also a prerequisite for installing additional nodes in the cluster.